Have you ever wondered how we know who we’re talking to on the phone? It’s obviously more than just the name displayed on the screen. If we hear an unfamiliar voice when being called from a saved number, we know right away something’s wrong. To determine who we’re really talking to, we unconsciously note the timbre, manner and intonation of speech. But how reliable is our own hearing in the digital age of artificial intelligence? As recent news shows, what we hear isn’t always trustworthy, because voices can be faked, or rather deepfaked.

Help, I’m in trouble

In spring 2023, scammers in Arizona attempted to extort money from a woman over the phone. She heard the voice of her 15-year-old daughter begging for help before an unknown man grabbed the phone and demanded a ransom, while her daughter’s screams could still be heard in the background. The mother was certain that the voice was really her child’s. Fortunately, she quickly found out that her daughter was safe and realized she had been targeted by scammers.

It can’t be 100% proven that the attackers used a deepfake to imitate the teenager’s voice. Maybe the scam was of a more traditional nature, with the call quality, unexpectedness of the situation, stress, and the mother’s imagination all playing their part to make her think she heard something she didn’t. But even if neural network technologies weren’t used in this case, deepfakes can and do indeed occur, and as their development continues they become increasingly convincing and more dangerous. To fight the exploitation of deepfake technology by criminals, we need to understand how it works.

What are deepfakes?

Deepfake technology (from “deep learning” + “fake”) has developed rapidly over the past few years. Machine learning can be used to create convincing fake images, video, or audio. For example, neural networks can replace one person’s face with another in photos and videos while preserving facial expressions and lighting. Early fakes were low quality and easy to spot, but as the algorithms improved, the results became so convincing that it’s now difficult to distinguish them from reality. In 2022, the world’s first deepfake TV show was released in Russia, in which deepfakes of Jason Statham, Margot Robbie, Keanu Reeves and Robert Pattinson play the main characters.

Deepfake versions of Hollywood stars in the Russian TV series PMJason. (Source)

Voice conversion

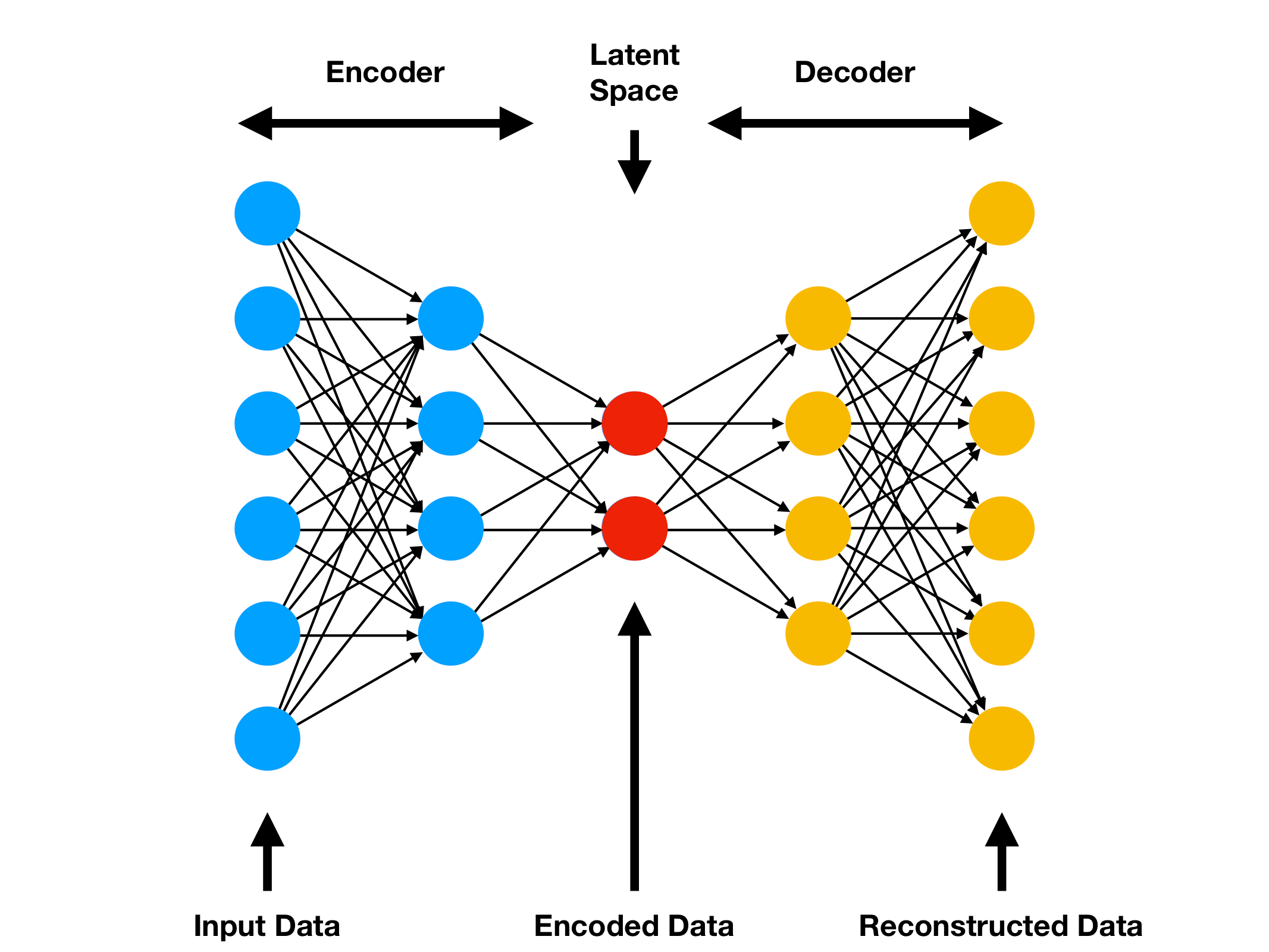

But today our focus is on the technology used to create voice deepfakes, also known as voice conversion (or “voice cloning” when you create a full digital copy of someone’s voice). Voice conversion is based on autoencoders: a type of neural network that first compresses input data into a compact internal representation (the encoder part), and then learns to decompress it back from this representation to restore the original data (the decoder part). This way the model learns to represent data in a compressed format that keeps the most important information.

Autoencoder scheme. (Source)
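To make the encoder/decoder idea concrete, here is a minimal sketch in plain Python. It is a toy linear autoencoder, not a voice model: the 4-number inputs, the hidden direction they all lie along, and the training settings are made-up assumptions, chosen so that a single number (the “compact internal representation”) is enough to describe each input.

```python
# Toy linear autoencoder (illustration only, not a voice-conversion model).
# Hypothetical data: 4-D vectors that all lie along one hidden direction,
# so one number per input is enough to describe it.
DIRECTION = [1.0, 2.0, 0.0, -1.0]
DATA = [[t * d for d in DIRECTION] for t in (-2.0, -1.0, -0.5, 0.5, 1.0, 2.0)]


def encode(w, x):
    """Encoder: compress a 4-D input into a single number (the code)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def decode(u, z):
    """Decoder: expand the code back into a 4-D reconstruction."""
    return [ui * z for ui in u]


def loss(w, u):
    """Mean squared reconstruction error over the dataset."""
    total = 0.0
    for x in DATA:
        x_hat = decode(u, encode(w, x))
        total += sum((a - b) ** 2 for a, b in zip(x_hat, x))
    return total / len(DATA)


def train(steps=2000, lr=0.01):
    """Full-batch gradient descent: learn to compress and then restore the data."""
    w = [0.1] * 4  # encoder weights
    u = [0.1] * 4  # decoder weights
    n = len(DATA)
    for _ in range(steps):
        grad_w = [0.0] * 4
        grad_u = [0.0] * 4
        for x in DATA:
            z = encode(w, x)
            err = [a - b for a, b in zip(decode(u, z), x)]  # reconstruction error
            back = sum(e * ui for e, ui in zip(err, u))     # error sent back to the code
            for j in range(4):
                grad_u[j] += 2 * err[j] * z / n
                grad_w[j] += 2 * back * x[j] / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        u = [ui - lr * g for ui, g in zip(u, grad_u)]
    return w, u


if __name__ == "__main__":
    w, u = train()
    x = [3 * d for d in DIRECTION]  # an input the model was not trained on
    z = encode(w, x)
    print("code:", round(z, 3))
    print("reconstruction:", [round(v, 3) for v in decode(u, z)])
```

Real voice models use deep, nonlinear encoders and decoders on audio features, but the principle is the same: the network is only rewarded for reconstructing its input, so the narrow middle layer is forced to keep whatever matters most.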

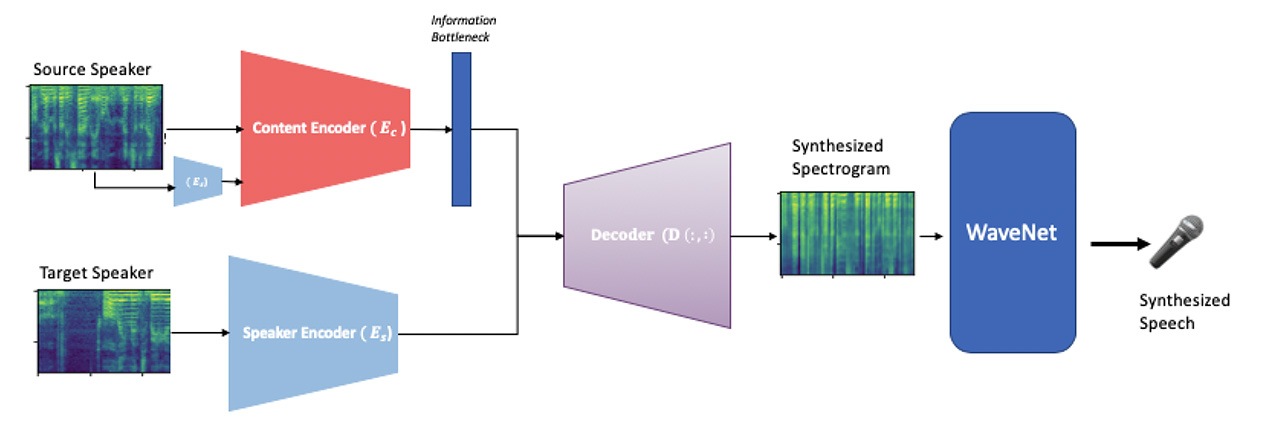

To make a voice deepfake, two audio recordings are fed into the model, and the voice in the first recording is converted into the voice from the second. The content encoder determines what was said in the first recording, and the speaker encoder extracts the main characteristics of the voice from the second recording, that is, how the second person talks. The compressed representations of what must be said and how it’s said are combined, and the decoder generates the result. Thus, what’s said in the first recording is voiced by the person from the second recording.

The process of making a voice deepfake. (Source)
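The data flow just described (content encoder on the first recording, speaker encoder on the second, decoder combining the two) can be sketched in a few lines. This is a deliberately simplified assumption, not how a real model separates the two: here each “recording” is a list of small feature vectors, and we pretend that the voice is a constant offset on every frame while the content varies over time and averages to zero, so plain averaging can stand in for the learned encoders.

```python
from statistics import fmean

# Toy model of a "recording": a list of frames, each a small feature vector.
# Assumption for this sketch only: every frame = what is said (varies over time,
# averages to zero) + who says it (a constant per-speaker offset).
Frame = list[float]


def speaker_encoder(recording: list[Frame]) -> Frame:
    """'How it's said': average over time, so only the constant voice part survives."""
    return [fmean(frame[d] for frame in recording) for d in range(len(recording[0]))]


def content_encoder(recording: list[Frame]) -> list[Frame]:
    """'What's said': remove the speaker's constant part, keep what changes over time."""
    voice = speaker_encoder(recording)
    return [[x - v for x, v in zip(frame, voice)] for frame in recording]


def decoder(content: list[Frame], voice: Frame) -> list[Frame]:
    """Combine the content with the voice characteristics into new frames."""
    return [[c + v for c, v in zip(frame, voice)] for frame in content]


def convert(first: list[Frame], second: list[Frame]) -> list[Frame]:
    """Voice the words of the first recording with the speaker from the second."""
    return decoder(content_encoder(first), speaker_encoder(second))


if __name__ == "__main__":
    words = [[1.0, -1.0], [-1.0, 1.0], [0.5, 0.0], [-0.5, 0.0]]  # zero-mean content
    voice_a, voice_b = [10.0, 0.0], [0.0, 10.0]                   # two made-up "voices"
    first = [[w + v for w, v in zip(f, voice_a)] for f in words]
    second = [[w + v for w, v in zip(f, voice_b)] for f in [[2.0, 0.0], [-2.0, 0.0]]]
    print(convert(first, second))  # [[1.0, 9.0], [-1.0, 11.0], [0.5, 10.0], [-0.5, 10.0]]
```

The output keeps the ups and downs of the first recording but sits on the second speaker’s “voice” offset. In a real system both encoders and the decoder are neural networks trained so that this separation emerges from data rather than from a hand-made assumption.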

There are other approaches too, for example ones based on generative adversarial networks (GANs) or diffusion models. The film industry in particular supports research into making deepfakes. Think about it: with audio and video deepfakes, it’s possible to replace the faces of actors in movies and TV shows, and to dub movies into any language with synchronized facial expressions.

How it’s done

As we were researching deepfake technologies, we wondered how hard it would be to make our own voice deepfake. It turns out there are lots of free open-source tools for voice conversion, but it isn’t easy to get a high-quality result with them. It takes Python programming experience and good audio-processing skills, and even then the quality is far from ideal. Besides open-source tools, there are also proprietary and paid solutions.

For example, in early 2023, Microsoft announced an algorithm that could reproduce a human voice from an audio sample only three seconds long! This model also works with multiple languages, so you can even hear yourself speaking a foreign language. All this looks promising, but so far it’s only at the research stage. The ElevenLabs platform, on the other hand, lets users make voice deepfakes without any effort: just upload an audio recording of the voice and the words to be spoken, and that’s it. Of course, as soon as word got out, people started playing with this technology in all sorts of ways.

Hermione’s battle and an overly trusting bank

In full accordance with Godwin’s law, Emma Watson was made to read “Mein Kampf”, and another user used ElevenLabs technology to “hack” his own bank account. Sounds creepy? It does to us, especially when you add the popular horror stories about scammers who pretend to be a bank, government agency or polling service, get people to say “yes” or “confirm” over the phone to collect voice samples, and then steal money using voice authorization.

But in reality things aren’t so bad. Firstly, it takes about five minutes of audio recordings to create an artificial voice in ElevenLabs, so a simple “yes” isn’t enough. Secondly, banks also know about these scams, so voice can only be used to initiate certain operations that aren’t related to the transfer of funds (for example, to check your account balance). So money can’t be stolen this way.
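The second safeguard, where a voice match on its own never unlocks a money transfer, can be pictured as a simple allowlist. This is a hypothetical sketch: the operation names and the stronger second factor (a one-time code) are made-up assumptions for illustration, not any particular bank’s rules.

```python
from enum import Enum


class AuthMethod(Enum):
    VOICE = "voice"        # caller's voice matched the stored voiceprint
    ONE_TIME_CODE = "otp"  # hypothetical stronger factor, e.g. a code sent to the banking app


# Hypothetical policy: which operations each authentication method may start.
ALLOWED_OPERATIONS = {
    AuthMethod.VOICE: {"check_balance", "list_recent_transactions"},
    AuthMethod.ONE_TIME_CODE: {"check_balance", "list_recent_transactions", "transfer_funds"},
}


def is_allowed(method: AuthMethod, operation: str) -> bool:
    """Default deny: allow an operation only if it is listed for this method."""
    return operation in ALLOWED_OPERATIONS.get(method, set())


if __name__ == "__main__":
    print(is_allowed(AuthMethod.VOICE, "check_balance"))   # True
    print(is_allowed(AuthMethod.VOICE, "transfer_funds"))  # False
```

Even a flawless voice clone only reaches the first set of operations; moving money requires a factor the scammer doesn’t have.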

To its credit, ElevenLabs reacted to the problem quickly by rewriting its service rules, prohibiting free (i.e., anonymous) users from creating deepfakes based on voices they upload, and blocking accounts that receive complaints about “offensive content”.

While these measures may be useful, they still don’t solve the problem of voice deepfakes being used for malicious purposes.

How else deepfakes are used in scams

Deepfake technology in itself is harmless, but in the hands of scammers it can become a dangerous tool with lots of opportunities for deception, defamation or disinformation. Fortunately, there haven’t been any mass cases of scams involving voice alteration, but there have been several high-profile cases involving voice deepfakes.

In 2019, scammers used this technology to shake down a UK-based energy firm. In a telephone conversation, the scammer pretended to be the chief executive of the firm’s German parent company, and requested the urgent transfer of €220,000 ($243,000) to the account of a certain supplier company. After the payment was made, the scammer called twice more: the first time to put the UK office staff at ease by reporting that the parent company had already sent a refund, and the second time to request another transfer. All three times the UK CEO was absolutely positive that he was talking with his boss because he recognized both his German accent and his tone and manner of speech. The second transfer was never made, but only because the scammer slipped up and called from an Austrian number instead of a German one, which made the UK CEO suspicious.

A year later, in 2020, scammers used deepfakes to steal up to $35,000,000 from an unnamed Japanese company (the investigation did not disclose the company’s name or the total amount stolen).

It’s unknown which solutions (open source, paid, or even their own) the scammers used to fake voices, but in both the above cases the companies clearly suffered – badly – from deepfake fraud.

What’s next?

Opinions differ about the future of deepfakes. Currently, most of this technology is in the hands of large corporations, and its availability to the public is limited. But as the history of much more popular generative models like DALL-E, Midjourney and Stable Diffusion shows, and even more so that of large language models (ChatGPT, anyone?), similar technologies may well become publicly available in the foreseeable future. This is supported by a recent leak of internal Google correspondence in which representatives of the internet giant fear they’ll lose the AI race to open-source solutions. This will obviously result in more use of voice deepfakes, including for fraud.

The most promising step in the development of deepfakes is real-time generation, which will drive explosive growth of deepfakes (and of fraud based on them). Can you imagine a video call with someone whose face and voice are completely fake? However, this level of data processing requires huge resources that only large corporations have, so the best technologies will remain private and fraudsters won’t be able to keep up with the professionals. This gap in quality will also make it easier for users to learn to spot fakes.

How to protect yourself

Now back to our very first question: can we trust the voices we hear (that is, if they’re not the voices in our head)? Well, it’s probably overkill to be paranoid all the time and come up with secret code words to use with friends and family; however, in more serious situations such paranoia might be appropriate. If things develop according to the pessimistic scenario, deepfake technology in the hands of scammers could become a formidable weapon, but there’s still time to prepare and build reliable methods of protection against fakes: there’s already a lot of research into deepfakes, and large companies are developing security solutions. In fact, we’ve already talked in detail about ways to combat video deepfakes here.

For now, protection against AI fakes is only just beginning, so it’s important to keep in mind that deepfakes are just another kind of advanced social engineering. The risk of encountering fraud like this is small, but it’s still there, so it’s worth knowing and keeping in mind. If you get a strange call, pay attention to the sound quality. Is it in an unnatural monotone, is it unintelligible, or are there strange noises? Always double-check information through other channels, and remember that surprise and panic are what scammers rely on most.
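The “double-check through other channels” advice can be written down as a simple rule: a request for money, pressure to act fast, or a number you don’t expect means you hang up and call back on a number you saved yourself. The hypothetical sketch below encodes only the checklist from this article; the contact names and phone numbers are placeholders, and it is not a real fraud-detection system.

```python
from dataclasses import dataclass


@dataclass
class IncomingCall:
    claimed_identity: str  # who the caller says they are
    caller_number: str     # number shown on screen (caller ID can be spoofed)
    asks_for_money: bool
    sounds_urgent: bool


# Hypothetical contact list: numbers you saved yourself, independently of the call.
SAVED_NUMBERS = {
    "boss": "+49 000 0000001",
    "daughter": "+1 000 0000002",
}


def should_verify(call: IncomingCall) -> bool:
    """Return True if you should hang up and call back on the saved number.

    Money or urgency always triggers a check, because a familiar voice and a
    familiar number can both be faked. An unexpected number triggers one too.
    """
    saved = SAVED_NUMBERS.get(call.claimed_identity)
    unexpected_number = saved is None or call.caller_number != saved
    return call.asks_for_money or call.sounds_urgent or unexpected_number


if __name__ == "__main__":
    # Like the 2019 case: "the boss", an unexpected foreign number, an urgent transfer.
    print(should_verify(IncomingCall("boss", "+43 000 0000003", True, True)))  # True
    # A routine call from a saved number with no requests.
    print(should_verify(IncomingCall("daughter", "+1 000 0000002", False, False)))  # False
```

Note that a matching number alone never clears a money request: caller ID is easy to spoof, so the callback always goes to the number you already have on file.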
