In early August 2021, Apple unveiled its new system for identifying photos containing images of child abuse. Although Apple’s motives — combating the dissemination of child pornography — seem indisputably well-intentioned, the announcement immediately came under fire.

Apple has long cultivated an image of itself as a device maker that cares about user privacy. New features anticipated for iOS 15 and iPadOS 15 have already dealt a serious blow to that reputation, but the company is not backing down. Here’s what happened and how it will affect average users of iPhones and iPads.

What is CSAM Detection?

Apple’s plans are outlined on the company’s website. The company developed a system called CSAM Detection, which searches users’ devices for “child sexual abuse material,” also known as CSAM.

Although “child pornography” is synonymous with CSAM, the National Center for Missing and Exploited Children (NCMEC), which helps find and rescue missing and exploited children in the United States, considers “CSAM” the more appropriate term. NCMEC provides Apple and other technology firms with information on known CSAM images.

Apple introduced CSAM Detection along with several other features that expand parental controls on Apple mobile devices. For example, parents will receive a notification if someone sends their child a sexually explicit photo in Apple Messages.

The simultaneous unveiling of several technologies resulted in some confusion, and a lot of people got the sense that Apple was now going to monitor all users all the time. That’s not the case.

CSAM Detection rollout timeline

CSAM Detection will be part of the iOS 15 and iPadOS 15 mobile operating systems, which will become available to users of all current iPhones and iPads (iPhone 6S, fifth-generation iPad and later) this autumn. Although the function will theoretically be available on Apple mobile devices everywhere in the world, for now the system will work fully only in the United States.

How CSAM Detection will work

CSAM Detection works only in conjunction with iCloud Photos, which is the part of the iCloud service that uploads photos from a smartphone or tablet to Apple servers. It also makes them accessible on the user’s other devices.

If a user disables photo syncing in the settings, CSAM Detection stops working. Does that mean photos are compared with those in criminal databases only in the cloud? Not exactly. The system is deliberately complex; Apple is trying to guarantee a necessary level of privacy.

As Apple explains, CSAM Detection works by scanning photos on a device to determine whether they match photos in NCMEC’s or other similar organizations’ databases.

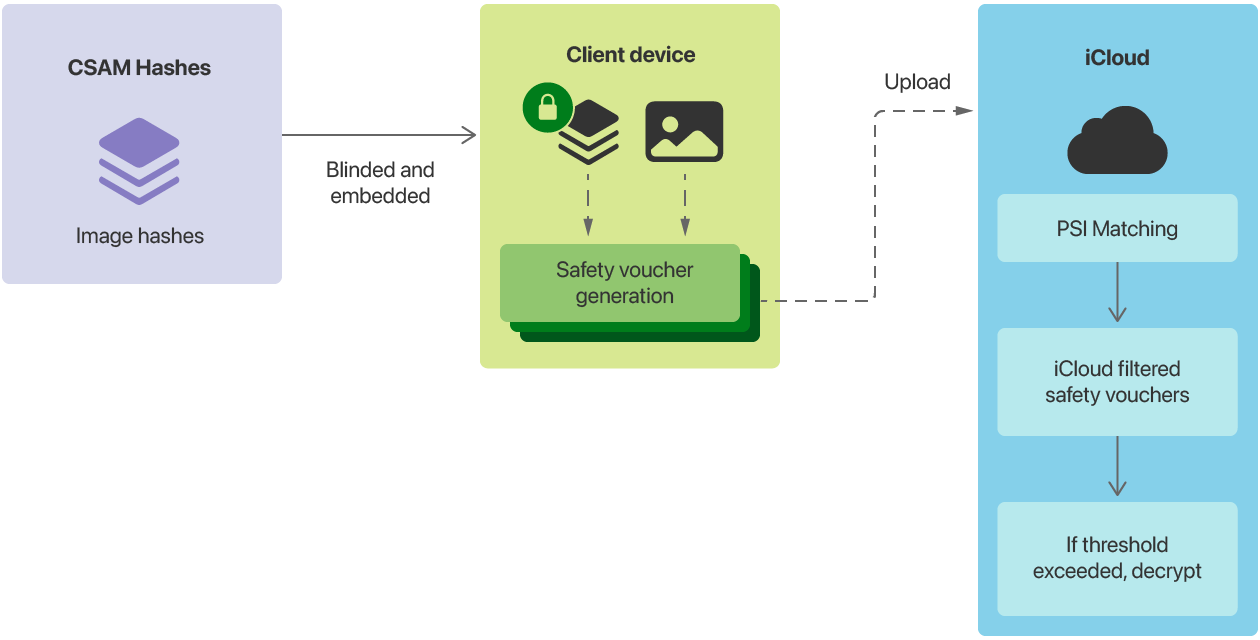

Simplified diagram of how CSAM Detection works. Source

The detection method uses NeuralHash technology, which in essence creates digital identifiers, or hashes, for photos based on their contents. If a hash matches one in the database of known child-exploitation images, then the image and its hash are uploaded to Apple’s servers. Apple performs another check before officially registering the image.
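To make the idea of a content-based hash concrete, here is a minimal sketch. It is not NeuralHash, which relies on a neural network. It swaps in a much simpler "average hash" as a stand-in, and the names `average_hash` and `matches_known` and the tiny pixel grids are made up for illustration.

```python
from typing import List, Set

Image = List[List[int]]  # grayscale pixel values, 0-255 (toy representation)


def average_hash(pixels: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def matches_known(image_hash: int, known_hashes: Set[int]) -> bool:
    """Check a hash against a set of known hashes (hypothetical stand-in for the database)."""
    return image_hash in known_hashes


original = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
# The same picture, slightly brightened, as might happen after re-encoding.
brightened = [[min(255, p + 5) for p in row] for row in original]
# A different picture altogether.
unrelated = [[10, 10, 200, 200] for _ in range(4)]

known_hashes = {average_hash(original)}

print(matches_known(average_hash(brightened), known_hashes))
print(matches_known(average_hash(unrelated), known_hashes))
```

Unlike a cryptographic hash, a hash of this kind is meant to survive small changes to the picture, which is why the brightened copy still matches while the unrelated image does not.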

Another component of the system, cryptographic technology called private set intersection, encrypts the results of the CSAM Detection scan such that Apple can decrypt them only if a series of criteria are met. In theory, that should prevent the system from being misused — that is, it should prevent a company employee from abusing the system or handing over images at the request of government agencies.
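For readers unfamiliar with private set intersection, the following toy shows the general idea: two parties find out which items they have in common without exchanging the items themselves. It is a generic Diffie-Hellman-style construction, not Apple's protocol. In this sketch it is the client who learns the overlap, which differs from the arrangement described above, and the modulus, function names, and file names are assumptions chosen for illustration only.

```python
import hashlib
import math
import secrets
from typing import Iterable, List, Set

P = 2**127 - 1  # a Mersenne prime; far too small for real-world security


def hash_to_group(item: str) -> int:
    """Map an item to a nonzero number modulo P."""
    digest = hashlib.sha256(item.encode("utf-8")).digest()
    return (int.from_bytes(digest, "big") % P) or 1


def new_key() -> int:
    """Pick a random exponent that is invertible modulo P - 1, so blinding is one-to-one."""
    while True:
        key = secrets.randbelow(P - 3) + 2
        if math.gcd(key, P - 1) == 1:
            return key


def blind(values: Iterable[int], key: int) -> List[int]:
    """Raise every value to a secret power; applying two keys gives the same result in either order."""
    return [pow(v, key, P) for v in values]


def private_intersection(client_items: List[str], server_items: List[str]) -> Set[str]:
    client_key, server_key = new_key(), new_key()

    # Client -> server: the client's items, blinded with the client's key.
    client_blinded = blind([hash_to_group(x) for x in client_items], client_key)

    # Server -> client: the client's values blinded again, plus the server's own blinded items.
    client_double = blind(client_blinded, server_key)
    server_blinded = blind([hash_to_group(y) for y in server_items], server_key)

    # Client: blind the server's values with its own key and compare.
    server_double = set(blind(server_blinded, client_key))
    return {x for x, d in zip(client_items, client_double) if d in server_double}


common = private_intersection(["a.jpg", "b.jpg", "c.jpg"], ["b.jpg", "z.jpg"])
print(common)
```

Neither side ever sees the other's raw items, only blinded values, yet matching items end up with identical doubly blinded values because exponentiation with two keys commutes.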

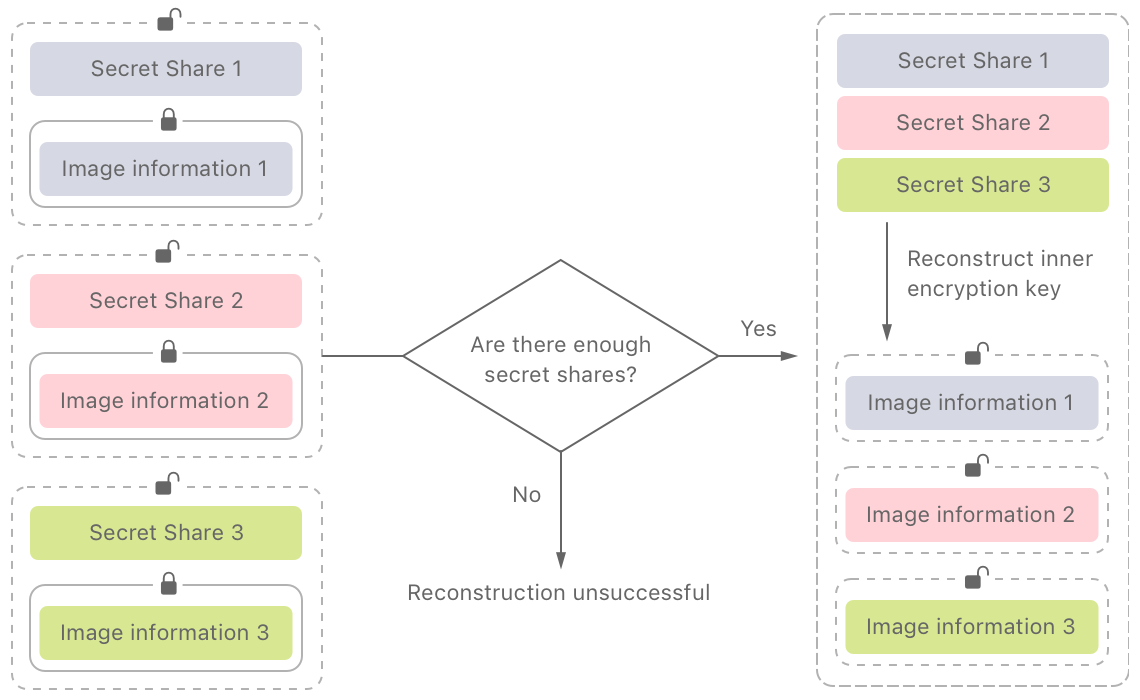

In an August 13 interview with the Wall Street Journal, Craig Federighi, Apple’s senior vice president of software engineering, articulated the main safeguard for the private set intersection protocol: To alert Apple, 30 photos need to match images in the NCMEC database. As the diagram shows, the private set intersection system will not allow the data set (information about the operation of CSAM Detection and the photos) to be decrypted until that threshold is reached. According to Apple, because the threshold for flagging an account is so high, a false match is very unlikely: a “one in a trillion chance.”

An important feature of the CSAM Detection system: to decrypt data, a large number of photos need to match. Source
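One standard way to get this "nothing can be decrypted until enough matches accumulate" behavior is threshold secret sharing. The sketch below uses Shamir's scheme purely as an illustration and is not a claim about how Apple's code is written. The names `make_shares` and `recover`, the modulus, and the share counts are assumptions; the threshold of 30 simply mirrors the figure quoted above.

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # field modulus for the toy; a Mersenne prime

Share = Tuple[int, int]  # (x, y) point on the hidden polynomial


def make_shares(secret: int, threshold: int, count: int) -> List[Share]:
    """Hide `secret` as the constant term of a random polynomial of degree threshold - 1."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation of the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover(shares: List[Share]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 from the given shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # Modular inverse via Fermat's little theorem (PRIME is prime).
        secret = (secret + yi * num * pow(den, PRIME - 2, PRIME)) % PRIME
    return secret


decryption_key = 123456789  # stand-in for the key that unlocks the match data
shares = make_shares(decryption_key, threshold=30, count=40)

print(recover(shares[:30]) == decryption_key)  # 30 shares: key is recovered
print(recover(shares[:29]) == decryption_key)  # 29 shares: key stays hidden
```

In this picture, each matching photo would contribute one share. With 29 shares the interpolation yields an unrelated number (except with negligible probability), and with 30 the key falls out.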

What happens when the system is alerted? An Apple employee manually checks the data, confirms the presence of child pornography, and notifies authorities. For now the system will work fully only in the United States, so the notification will go to NCMEC, which is sponsored by the US Department of Justice.

Problems with CSAM Detection

Potential criticism of Apple’s actions falls into two categories: questioning the company’s approach and scrutinizing the protocol’s vulnerabilities. At the moment, there is little concrete evidence that Apple made a technical error (an issue we will discuss in more detail below), although there has been no shortage of general complaints.

For example, the Electronic Frontier Foundation has described these issues in great detail. According to the EFF, by adding image scanning on the user side, Apple is essentially embedding a back door in users’ devices. The EFF has criticized the concept since as early as 2019.

Why is that a bad thing? Well, consider having a device on which the data is completely encrypted (as Apple asserts) that then begins reporting to outsiders about that content. At the moment the target is child pornography, leading to a common refrain, “If you’re not doing anything wrong, you have nothing to worry about,” but as long as such a mechanism exists, we cannot know that it won’t be applied to other content.

Ultimately, that criticism is political more than technological. The problem lies in the absence of a social contract that balances security and privacy. All of us, from bureaucrats, device makers, and software developers to human-rights activists and rank-and-file users, are trying to define that balance now.

Law-enforcement agencies complain that widespread encryption complicates collecting evidence and catching criminals, and that is understandable. Concerns about mass digital surveillance are also obvious. Opinions, including opinions about Apple’s policies and actions, are a dime a dozen.

Potential problems with implementing CSAM Detection

Once we move past ethical concerns, we hit some bumpy technological roads. Any new code can introduce new vulnerabilities. Never mind what governments might do; what if a cybercriminal took advantage of CSAM Detection’s vulnerabilities? When it comes to data encryption, the concern is natural and valid: If you weaken information protection, even if it’s with only good intentions, then anyone can exploit the weakness for other purposes.

An independent audit of the CSAM Detection code has just begun and could take a very long time. However, we have already learned a few things.

First, code that makes it possible to compare photos against a “model” has existed in iOS (and macOS) since version 14.3. It is entirely possible that the code will be part of CSAM Detection. Utilities for experimenting with a search algorithm for matching images have already found some collisions. For example, according to Apple’s NeuralHash algorithm, the two images below have the same hash:

According to Apple’s NeuralHash algorithm, these two photos match. Source

If it is possible to pull out the database of hashes of illegal photos, then it is possible to create “innocent” images that trigger an alert, meaning Apple could receive enough false alerts to make CSAM Detection unsustainable. That is most likely why Apple separated the detection, with part of the algorithm working only on the server end.
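Why collisions are possible at all can be shown with a toy example. The collisions found in NeuralHash came from experimenting with a neural-network-based algorithm. The sketch below uses a far simpler "average hash" as a stand-in, with made-up 2×2 pixel grids, and only demonstrates the underlying principle: a perceptual hash throws information away, so distinct images can share a hash.

```python
from typing import List

Image = List[List[int]]  # grayscale pixel values, 0-255 (toy representation)


def average_hash(pixels: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


# Two clearly different sets of pixel values...
image_a = [
    [0, 255],
    [255, 0],
]
image_b = [
    [90, 140],
    [200, 60],
]

# ...that nevertheless share the same bright/dark pattern relative to their own mean.
hash_a = average_hash(image_a)
hash_b = average_hash(image_b)

print(image_a != image_b)
print(hash_a == hash_b)
```

Someone who knows a target hash can work backward in the same spirit, adjusting pixels until an innocent-looking picture lands on that hash, which is the forgery scenario described above.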

There is also this analysis of Apple’s private set intersection protocol. The complaint is essentially that even before reaching the alert threshold, the PSI system transfers quite a bit of information to Apple’s servers. The article describes a scenario in which law-enforcement agencies request the data from Apple, and it suggests that even false alerts might lead to a visit from the police.

For now, the above are just initial tests of an external review of CSAM Detection. Their success will depend largely on the famously secretive company providing transparency into CSAM Detection’s workings — and in particular, its source code.

What CSAM Detection means for the average user

Modern devices are so complex that it is no easy feat to determine how secure they really are — that is, to what extent they live up to the maker’s promises. All most of us can do is trust — or distrust — the company based on its reputation.

However, it is important to remember this key point: CSAM Detection operates only if users upload photos to iCloud. Apple’s decision was deliberate and anticipated some of the objections to the technology. If you do not upload photos to the cloud, nothing will be sent anywhere.

You may remember the notorious conflict between Apple and the FBI in 2016, when the FBI asked Apple for help unlocking an iPhone 5C that belonged to a mass shooter in San Bernardino, California. The FBI wanted Apple to write software that would let the FBI get around the phone’s password protection.

The company, recognizing that complying could result in unlocking not only the shooter’s phone but also anyone’s phone, refused. The FBI backed off and ended up hacking the device with outside help, exploiting the software’s vulnerabilities, and Apple maintained its reputation as a company that fights for its customers’ rights.

However, the story isn’t quite that simple. Apple did hand over a copy of the data from iCloud. In fact, the company has access to practically any user data uploaded to the cloud. Some of it, such as Keychain passwords and payment information, is stored using end-to-end encryption, but most information is encrypted only for protection from unsanctioned access, that is, from a hack of the company’s servers. That means the company can decrypt the data.

The implications make for perhaps the most interesting plot twist in the story of CSAM Detection. The company could, for example, simply scan all of the images in iCloud Photos (as Facebook, Google, and many other cloud service providers do). Apple created a more elegant mechanism that would help it repel accusations of mass user surveillance, but instead, it drew even more criticism — for scanning users’ devices.

Ultimately, the hullabaloo hardly changes anything for the average user. If you are worried about protecting your data, you should look at any cloud service with a critical eye. Data you store only on your device is still safe. Apple’s recent actions have sown well-founded doubts. Whether the company will continue in this vein remains an open question.
